Are you setting the right expectations for your content and programs?

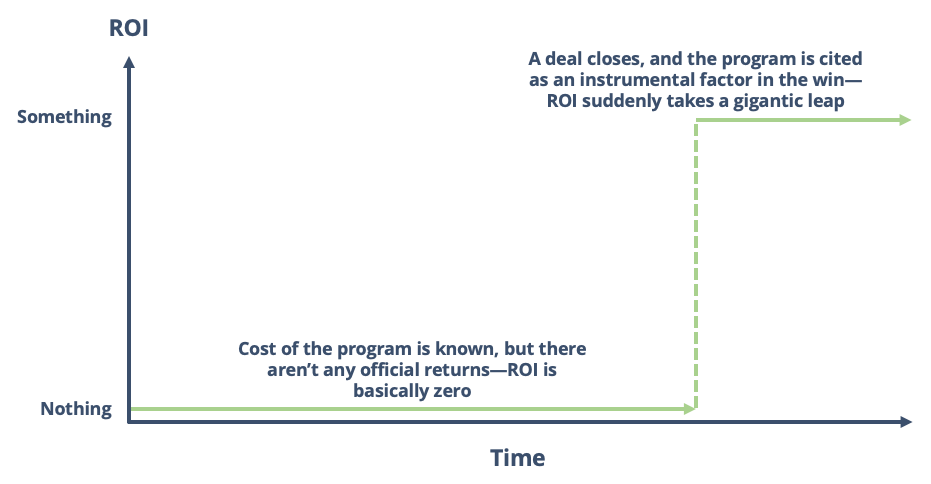

Yesterday, I was chatting with a client about an upcoming campaign that’ll feature some Cromulent-produced content. This campaign will promote technical material to a very specific audience. Essentially, it aims both to educate prospects about the nature of some specific problems of traditional solutions and to explain how our client’s solution overcomes these issues.

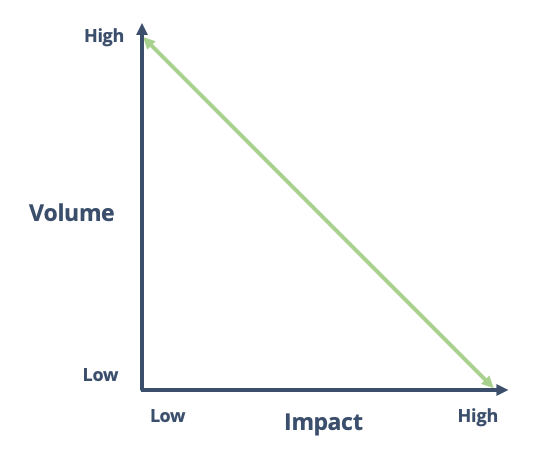

Volume vs Impact (vs Expectations)

During the conversation, I learned that this campaign is our client’s first real foray into promoting technical marketing assets. We chatted about what to expect; in a nutshell:

- This campaign is a low-volume/high-impact activity, which has important implications for how success should be measured

- In particular, success will likely come in the form of accelerating particular deals, overcoming buying barriers, protecting margins, etc.; these things are tough to measure, unlike volumetric counts that are relatively straightforward and automatic

- Most people think about marketing in terms of high-volume/low-impact activities that provide plenty of numbers, conversion rates, and so on

- This gap between the nature of this campaign and most people’s ideas about marketing risks creating misguided and unrealistic expectations

Volume vs Impact

While this point is dangerously broad, the relationship between the volume of a ‘thing’ and its per-unit impact is usually inverse.

That is to say, things that:

- You do a lot of (high unit volume) tend to have a very low unit impact—e.g., email blasts

- Make a difference per unit (high impact) tend not to lend themselves to high volume—e.g., technical whitepapers

- Are sorta useful (medium impact) can be done in some not-too-big, but not-too-small quantity—e.g., webinars

(of course, it’s perfectly possible to deviate from the diagram in a very negative way—say, by investing resources into a low-volume activity that ends up being ineffective)

Our client’s market, in general—and the content and goals of this campaign, in particular—definitely fall on the low-volume/high-impact end of the distribution: there’s a relatively small audience in the world for this material, but that audience cares an awful lot. Done right, this content can make a very big difference.

This scenario is very familiar to me. Back in the day, I helped create a comprehensive technical content library that performed very highly relative to our competitors’. Our markets perceived us as the superior option and the most trustworthy vendor. More concretely, this content was instrumental—critical, even, in a few cases—in winning multi-million dollar deals.

Interested in technical marketing? Then you might want to check out Tackling Technical Topics. It outlines a model for, um, tackling technical topics, and goes through an example from my past.

Expectations

Things that are precisely measured are rewarded disproportionally relative to impact.

Theo Epstein, President of the Chicago Cubs

However, until those deals closed and associated customer anecdotes and testimonials about the importance of the technical material came in, measuring the impact of building out our technical content was tough. In terms of financial ROI, we were basically cruising along at nothing for a while, until suddenly jumping to 80,000%.

It’s a classic step function, for you engineering nerds out there.

An alternative is to design and implement a pretty robust multi-touch attribution model; it’ll help you assign a dollar value (or some other value) to material throughout the sales process. But that’s tough to do, so most companies don’t do it.
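To make the idea concrete, here’s a minimal sketch of the simplest flavour of multi-touch attribution: a linear model, which splits each closed deal’s value equally across every asset that touched it. Treat it as an illustration under stated assumptions, not any particular company’s model; the deal values, asset names, and the `linear_attribution` function are all hypothetical.

```python
from collections import defaultdict


def linear_attribution(deals):
    """Split each closed deal's value equally across the assets that touched it."""
    credit = defaultdict(float)
    for deal in deals:
        touches = deal["touches"]
        if not touches:
            continue  # no recorded touches, so nothing to credit
        share = deal["value"] / len(touches)
        for asset in touches:
            credit[asset] += share
    return dict(credit)


# Hypothetical closed deals and the assets each buyer engaged with
deals = [
    {"value": 1_200_000, "touches": ["webinar", "whitepaper", "demo"]},
    {"value": 300_000, "touches": ["email", "whitepaper"]},
]

credit = linear_attribution(deals)
for asset, value in sorted(credit.items()):
    print(f"{asset}: ${value:,.0f}")
```

Even in this toy version, the whitepaper collects credit from both deals, which is the kind of signal a download count would never show. Real models typically weight touches by position or timing rather than splitting evenly, and that extra work is part of why so few companies build one.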

Longtime readers will recall that Matt Trushinski of Miovision joined us for a podcast in which he spoke a bit about their well-developed attribution model. I’m happy to write that the model showed a great ROI for the technical showcase we wrote for their World’s Smartest Intersection campaign.

Unfortunately, most marketing discussions tend to involve high-volume/low-impact activities. A straightforward example is an email campaign: you send out a few thousand emails, and a few percent get opened, and some subset of those become qualified leads (or achieve some other milestone). Even at low conversions, the volume means you can immediately report back impressive-sounding numbers, and you’ve got a sample size sufficient to reach some informed conclusions.
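As a rough illustration of that arithmetic, here’s a small sketch with made-up numbers: 5,000 emails, a 3% open rate, and 10% of opens becoming qualified leads. The rates and the `email_funnel` helper are assumptions for illustration only, not benchmarks.

```python
def email_funnel(sent, open_rate, qualify_rate):
    """Return (opened, qualified) counts for a simple two-stage email funnel."""
    opened = round(sent * open_rate)
    qualified = round(opened * qualify_rate)
    return opened, qualified


# Hypothetical campaign: the volumes and rates are invented for this example
opened, qualified = email_funnel(sent=5000, open_rate=0.03, qualify_rate=0.10)
print(f"Sent 5000, opened {opened}, qualified {qualified}")
```

Every stage of the funnel yields a count you can report the same day, which is exactly what a paper downloaded a handful of times a month can’t offer.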

From my own experience going to many, many marketing peer-to-peer groups and meetups, I can report that most of the discussion centres on high-volume/low-impact initiatives. The natural result of this emphasis is that, within an organization, expectations can be wildly out of line, because people are conditioned to think only in terms of high-volume/low-impact.

For instance, in my previous world, our most popular papers might get downloaded 30 times a month; others, only 3 or 4 times. By those measures, the impact was questionable or negligible; had we relied on hits to our landing pages, or downloads of our content, to evaluate the success of our technical marketing program, then we might have abandoned the strategy before it had the chance to win deals. Fortunately, the organization trusted that I knew what I was doing, so we kept the program going—and the value became obvious over time.

So my advice to my client was to be mindful of setting appropriate expectations within the company for the upcoming campaign—everyone’s excited (which is great!), but excitement can unintentionally inflate or distort expectations.

Realistically, our client shouldn’t expect this technical marketing campaign to generate piles of leads, thousands of hits, etc.—and that’s OK. Instead, it’s reasonable to expect that the upcoming papers will have very significant impact within particular opportunities.

Success, in this case, comes not in terms of landing page hits and paper downloads, but through deal acceleration/milestones, time saved (e.g., the CTO doesn’t need to jump on a call with each prospect), value defended, etc.

But because these meaningful things are much tougher to measure, organizations have a tendency to fall back on the easily counted stuff. Measuring real impact takes ongoing effort: you need to have frequent conversations with account teams or channel partners; you need to conduct win/loss analysis; you need to know what was holding up a deal in the first place, etc. And it’s rarely as clean as someone saying, “Paper X convinced us to buy your solution” (although it’s incredibly satisfying when that does happen).

Of course, the high-volume/low-impact scenario can also distort expectations: make sure people don’t expect gigantic per-unit results; instead, you feel the results of these activities in aggregate.

Summing Up…

In summary:

- It’s important that you understand the nature of your content and related activities: high-volume/low-impact, low-volume/high-impact, or somewhere in-between?

- This understanding should inform legitimate measures of success—in some cases, success will only be measurable over a longer term and in different ways than many organizations are accustomed to

- Do your best to help other stakeholders understand that nature and develop realistic expectations; usually, a misguided expectation is just the result of misunderstanding what kind of activity is involved

—

Header/featured image: Photo by Elena Koycheva on Unsplash